A new brain-inspired AI method called Lp-Convolution enhances image recognition by dynamically reshaping CNN filters, combining biological realism with improved performance and efficiency.

Mimicking the Mind: A New Frontier in AI

Imagine a computer that doesn’t just process images but perceives them as we do: recognizing patterns, focusing on essential details, and adapting to new visual information with ease. A new development in artificial intelligence brings this vision a step closer.

Researchers from the Institute for Basic Science, Yonsei University, and the Max Planck Institute have introduced a novel AI technique called Lp-Convolution. This method brings machine vision closer to human visual processing, marking a significant stride in AI’s evolution.

Understanding Lp-Convolution: The Brain Behind the Tech

Traditional AI models, like Convolutional Neural Networks (CNNs), analyze images using fixed, square-shaped filters. While effective, this rigid approach doesn’t align with how our brains interpret visual stimuli.

Lp-Convolution changes the game by introducing a flexible filtering system modeled on the brain’s visual cortex. In the brain, neurons connect in a smooth, radial pattern, with connection strength falling off gradually with distance. Lp-Convolution emulates this by allowing filters to adapt their shape and focus rather than weighting every position in a fixed square equally, which the researchers report improves both recognition performance and efficiency.
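To illustrate what it means for a filter's shape to adapt, here is a minimal pure-Python sketch under stated assumptions, not the authors' implementation. It assumes a simplified, axis-aligned p-generalized Gaussian mask, exp(-(|dx/sx|^p + |dy/sy|^p)), where `lp_mask`, `sx` and `sy` are hypothetical names chosen here. With p = 2 the weights fall off smoothly from the centre, like the Gaussian connectivity of the visual cortex; as p grows, the mask flattens into a near-uniform square, like a conventional CNN filter; and unequal `sx` and `sy` stretch it along one axis. In the actual method the shape is learned rather than set by hand, and its exact parameterization may differ from this sketch.

```python
import math


def lp_mask(size, p, sx, sy):
    """Weights exp(-(|dx/sx|**p + |dy/sy|**p)) on a size x size grid.

    Hypothetical, axis-aligned simplification of an Lp-style mask:
    p = 2 gives a Gaussian bump, large p approaches a flat square,
    and sx != sy stretches the mask along one axis.
    """
    c = (size - 1) / 2  # index of the centre cell
    mask = []
    for i in range(size):          # rows (y direction)
        row = []
        for j in range(size):      # columns (x direction)
            dx, dy = (j - c) / sx, (i - c) / sy
            row.append(math.exp(-(abs(dx) ** p + abs(dy) ** p)))
        mask.append(row)
    return mask


if __name__ == "__main__":
    for p in (2, 256):
        m = lp_mask(7, p, 3.5, 3.5)
        print(f"p={p}: centre={m[3][3]:.2f}, corner={m[0][0]:.2f}")
```

Running the script prints a corner weight of about 0.23 for p = 2 and 1.00 for p = 256, with the centre weight at 1.00 in both cases: the same 7×7 grid behaves either like a smooth, centre-weighted filter or like a plain square one, depending only on p.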

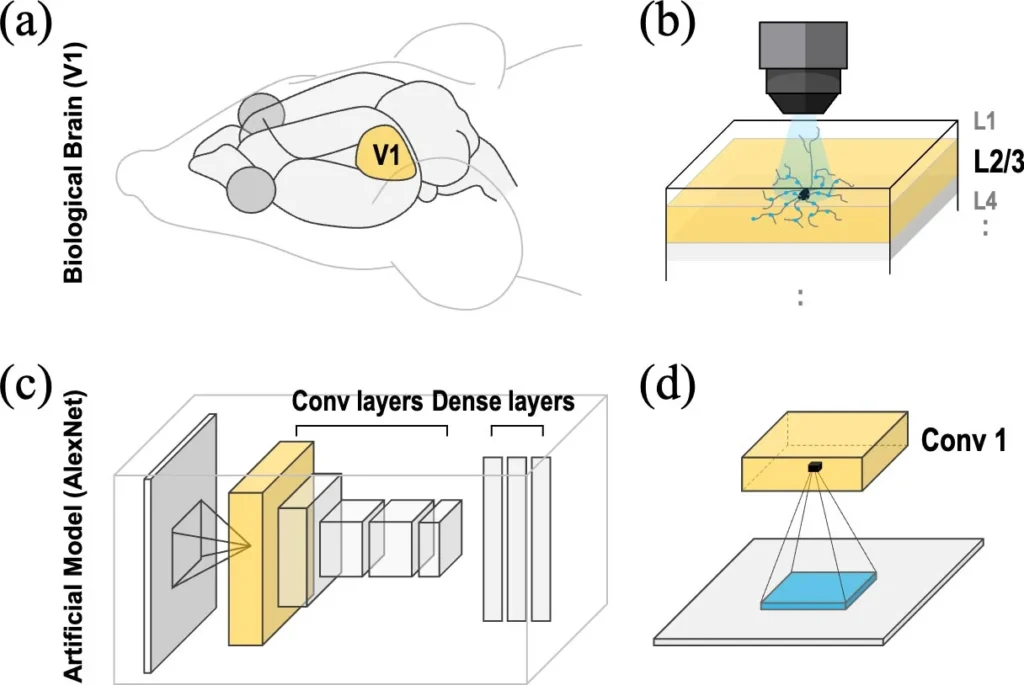

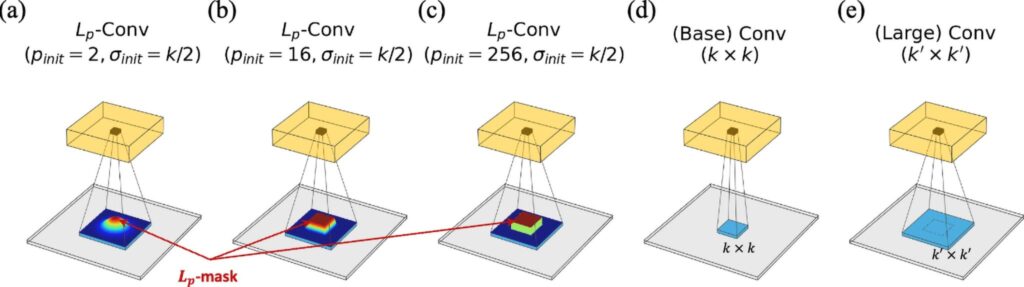

In the actual brain’s visual cortex, neurons are connected broadly and smoothly around a central point, with connection strength varying gradually with distance (a, b). This spatial connectivity follows a bell-shaped curve known as a ‘Gaussian distribution,’ enabling the brain to integrate visual information not only from the center but also from the surrounding areas. In contrast, traditional Convolutional Neural Networks (CNNs) process information by having neurons focus on a fixed rectangular region (e.g., 3×3, 5×5, etc.) (c, d). CNN filters move across an image at regular intervals, extracting information in a uniform manner, which limits their ability to capture relationships between distant visual elements or respond selectively based on importance. Credit: Institute for Basic Science
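For contrast, the fixed-window behaviour described in the caption can be sketched as a naive convolution in pure Python. This is a generic illustration, not code from the study, and `conv2d` is a hypothetical helper: a k×k kernel slides over the image in steps of `stride`, and each output value depends only on the pixels inside its square window. As in most deep-learning libraries, the operation is technically a cross-correlation (the kernel is not flipped), and no padding is applied.

```python
def conv2d(image, kernel, stride=1):
    """Valid (no padding) 2D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    out = []
    for top in range(0, ih - kh + 1, stride):        # slide down in fixed steps
        row = []
        for left in range(0, iw - kw + 1, stride):   # slide right in fixed steps
            # Only pixels inside the kh x kw square contribute; anything
            # outside the window has no influence on this output value.
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[top + i][left + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


if __name__ == "__main__":
    image = [[float(r * 5 + c) for c in range(5)] for r in range(5)]  # 5x5 ramp
    box = [[1.0] * 3 for _ in range(3)]  # 3x3 box filter: every cell weighted equally
    print(conv2d(image, box, stride=2))
```

With stride 2 the 3×3 window visits only four positions on the 5×5 ramp, and the script prints `[[54.0, 72.0], [144.0, 162.0]]`. Every cell in each window counts equally and nothing outside it counts at all, which is the rigid, uniform sampling the caption contrasts with the brain's graded connectivity.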

Real-World Applications: From Healthcare to Robotics

Potential applications of Lp-Convolution include:

- Medical Imaging: Improved accuracy in detecting anomalies in scans, leading to earlier and more reliable diagnoses.

- Autonomous Vehicles: Enhanced object recognition and decision-making capabilities, contributing to safer navigation.

- Robotics: More responsive and adaptable machines capable of operating in dynamic environments.

- Consumer Electronics: Smarter cameras and devices that can better interpret and respond to user needs.

The brain processes visual information using a Gaussian-shaped connectivity structure that gradually spreads from the center outward, flexibly integrating a wide range of information. In contrast, traditional CNNs face issues where expanding the filter size dilutes information or reduces accuracy (d, e). To overcome these structural limitations, the research team developed Lp-Convolution, inspired by the brain’s connectivity (a–c). This design spatially distributes weights to preserve key information even over large receptive fields, effectively addressing the shortcomings of conventional CNNs. Credit: Institute for Basic Science
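The caption's point, keeping a large receptive field without spreading weight uniformly across it, can be illustrated by multiplying a kernel element-wise by a mask. The sketch below assumes a simplified isotropic p-generalized Gaussian mask as a stand-in for the paper's learned mask; `lp_mask`, `apply_mask` and `centre_share` are hypothetical helpers, and the numbers are a toy illustration rather than results from the study.

```python
import math


def lp_mask(size, p, scale):
    """Isotropic mask exp(-(|dx/scale|**p + |dy/scale|**p)) on a size x size grid."""
    c = (size - 1) / 2  # index of the centre cell
    return [[math.exp(-(abs((j - c) / scale) ** p + abs((i - c) / scale) ** p))
             for j in range(size)] for i in range(size)]


def apply_mask(kernel, mask):
    """Element-wise product: the mask limits how much each weight can contribute."""
    return [[w * m for w, m in zip(k_row, m_row)]
            for k_row, m_row in zip(kernel, mask)]


def centre_share(kernel, inner=3):
    """Fraction of the kernel's total |weight| inside the central inner x inner block."""
    size = len(kernel)
    lo = (size - inner) // 2
    total = sum(abs(w) for row in kernel for w in row)
    centre = sum(abs(kernel[i][j])
                 for i in range(lo, lo + inner)
                 for j in range(lo, lo + inner))
    return centre / total


if __name__ == "__main__":
    big = [[1.0] * 7 for _ in range(7)]  # untrained 7x7 kernel, uniform weights
    print(f"plain 7x7: centre share {centre_share(big):.2f}")
    for p in (2, 256):
        masked = apply_mask(big, lp_mask(7, p, 3.5))
        print(f"p={p}: centre share {centre_share(masked):.2f}, "
              f"corner weight {masked[0][0]:.2f}")
```

In this toy setting, the share of weight in the central 3×3 block rises from about 0.18 for the plain 7×7 kernel to about 0.29 with p = 2, while the corner weights stay nonzero (about 0.23), so the filter still sees the whole field. With p = 256 the mask is effectively flat and the kernel behaves like an ordinary large square filter.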

A Step Toward Smarter, More Human-Like AI

This advancement isn’t just about better image processing; it’s about creating AI that understands and interacts with the world more like we do. By aligning machine vision with human perception, the work could help pave the way for AI systems that learn continuously, adapt to new situations, and perform complex tasks with greater autonomy.

The Road Ahead: Open Access and Collaborative Growth

The research team has made their code and models publicly available, encouraging further exploration and development in the field. This open-access approach fosters collaboration, accelerating innovation and the integration of Lp-Convolution into various technologies.

Reference: “Brain-inspired Lp-Convolution benefits large kernels and aligns better with visual cortex” by Jea Kwon, Sungjun Lim, Kyungwoo Song and C. Justin Lee, 11 March 2025, ICLR 2025.

The study will be presented at the International Conference on Learning Representations (ICLR) 2025. The code and models are available at https://github.com/jeakwon/lpconv/

Funding: Institute for Basic Science