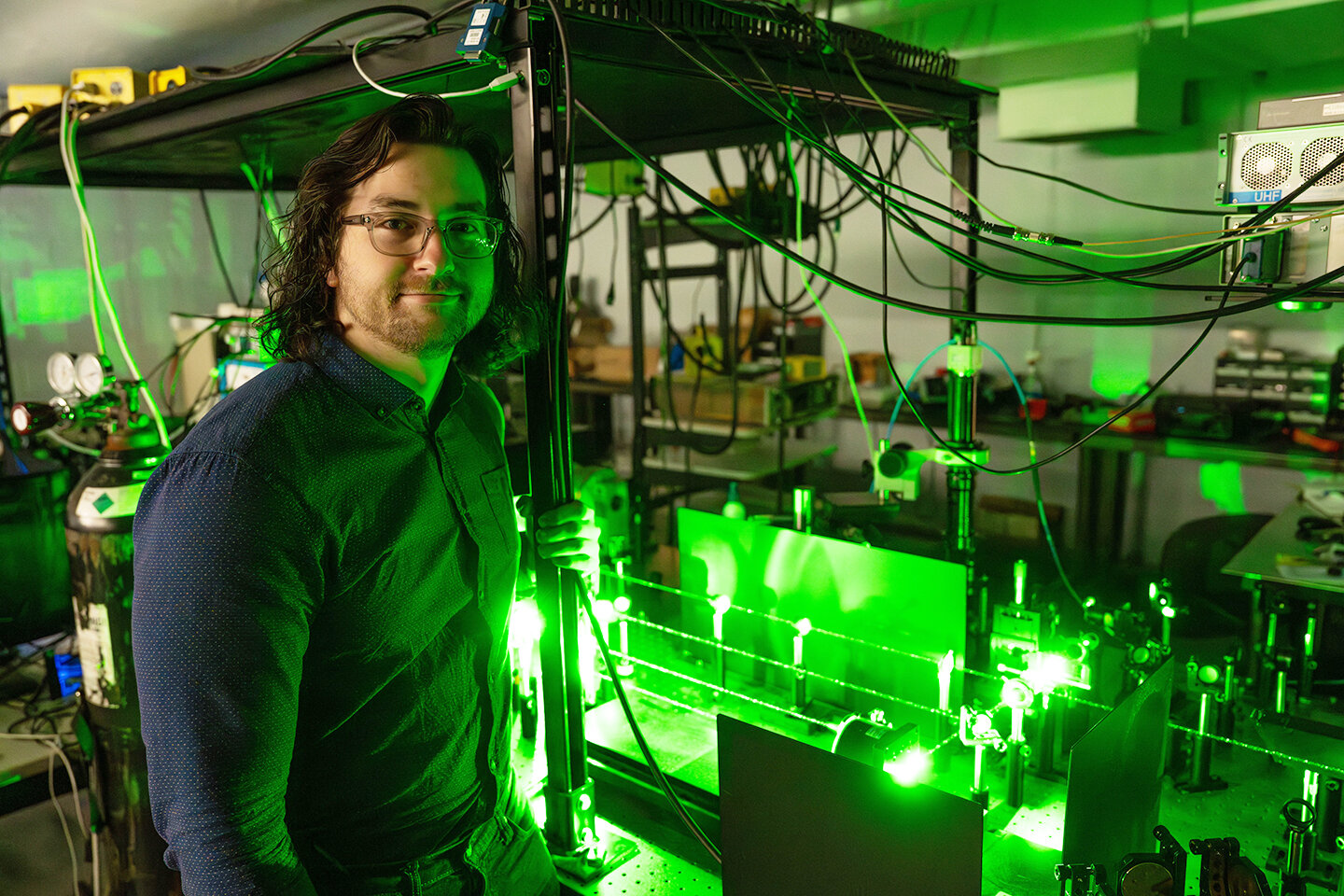

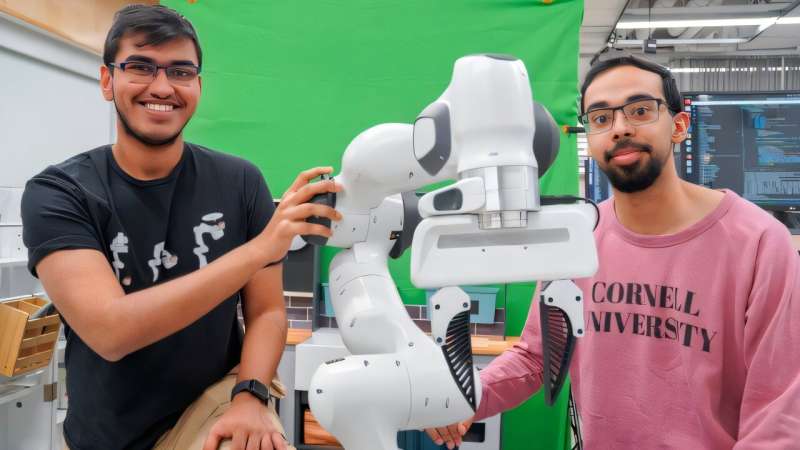

Kushal Kedia (left), a doctoral student in computer science, and Prithwish Dan are members of the development team behind RHyME, a system that allows robots to learn tasks by watching a single how-to video. Credit: Louis DiPietro

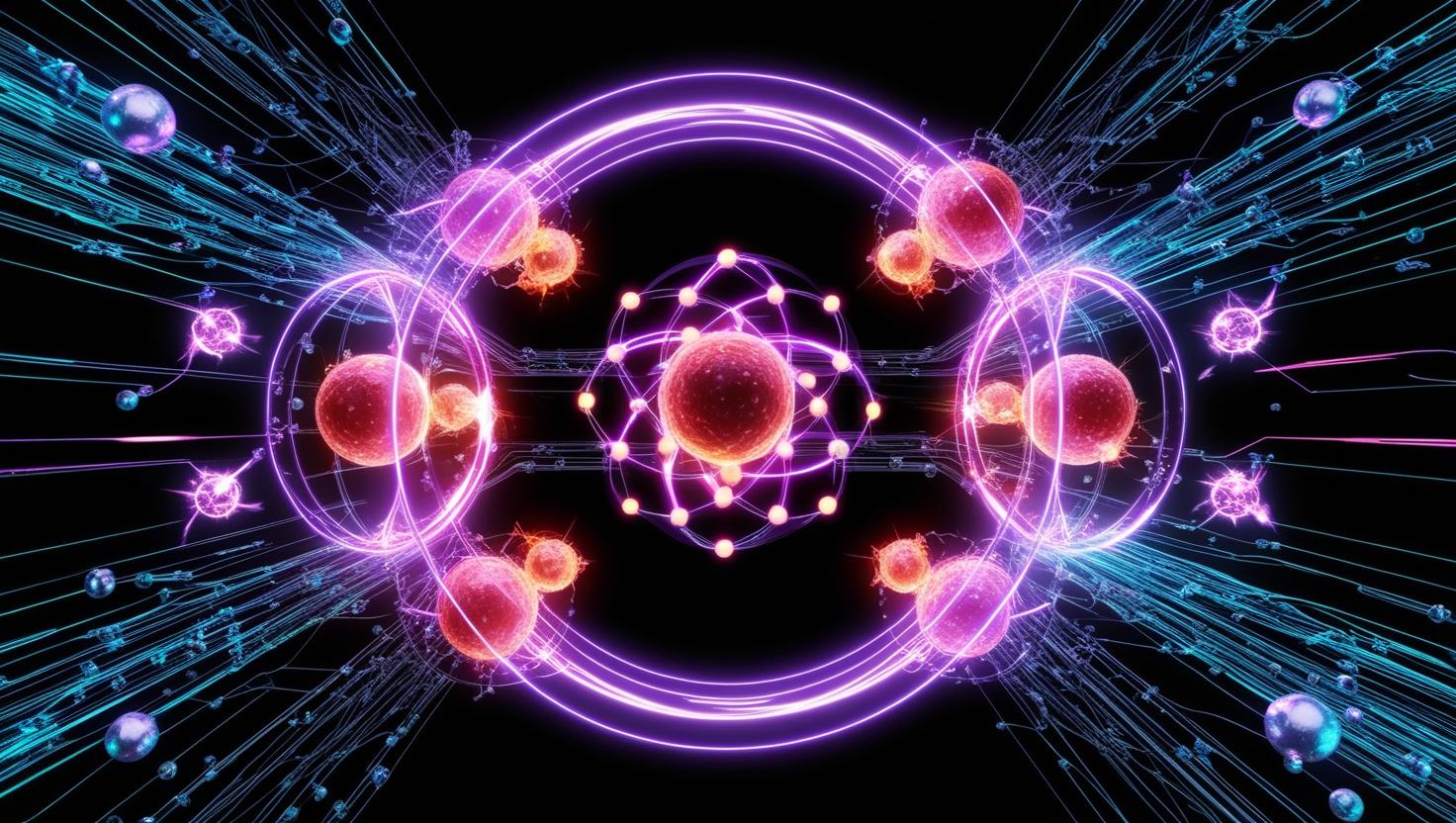

Imagine a robot that can learn to perform a task, such as loading a dishwasher or folding laundry, simply by watching a single how-to video. Research from Cornell University is bringing this scenario closer to reality with RHyME (Retrieval for Hybrid Imitation under Mismatched Execution), an AI-driven framework that allows robots to learn complex tasks by observing just one demonstration.

The Challenge: Teaching Robots Through Observation

Traditionally, programming robots to perform tasks requires extensive coding and numerous demonstrations. This process is time-consuming and limits the robot’s ability to adapt to new or unexpected situations. Moreover, slight differences between human demonstrations and robotic execution often lead to failures in task replication.

The Solution: RHyME’s Innovative Approach

Developed by Cornell researchers, RHyME addresses these challenges by enabling robots to learn from a single video demonstration, even when the human demonstrator's movements do not match what the robot can physically do. The system works in three steps:

- Observation: The robot watches a human perform a task in a video.

- Retrieval: The robot searches a database of its prior experiences for related actions.

- Adaptation: The robot identifies similarities and adapts the observed behavior to its own abilities, filling in gaps where direct imitation isn’t possible.
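The observe, retrieve, adapt loop above can be illustrated with a minimal sketch. It assumes each segment of the human video and each clip in the robot's memory has already been turned into a numeric feature vector by some video encoder, and it uses plain cosine-similarity nearest-neighbor retrieval as a generic stand-in. This is not RHyME's published retrieval method, which is more involved; the names `RobotClip`, `retrieve`, and `build_plan`, the clip labels, and the vectors are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class RobotClip:
    """Hypothetical entry in the robot's memory of prior experience."""
    name: str
    embedding: list[float]  # assumed feature vector from some video encoder


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(segment: list[float], memory: list[RobotClip]) -> RobotClip:
    """Retrieval: return the stored robot clip most similar to one human segment."""
    return max(memory, key=lambda clip: cosine_similarity(segment, clip.embedding))


def build_plan(human_segments: list[list[float]], memory: list[RobotClip]) -> list[str]:
    """Adaptation, in its simplest possible form: replace each observed human
    segment with the robot's own closest clip, so the plan uses only motions
    the robot has actually performed before."""
    return [retrieve(seg, memory).name for seg in human_segments]


# Hypothetical memory and a two-segment "how-to video" (observation).
memory = [
    RobotClip("reach_for_cup", [1.0, 0.0, 0.0]),
    RobotClip("open_drawer", [0.0, 1.0, 0.0]),
    RobotClip("place_in_rack", [0.0, 0.0, 1.0]),
]
human_video = [[0.9, 0.1, 0.0], [0.1, 0.2, 0.95]]
print(build_plan(human_video, memory))  # ['reach_for_cup', 'place_in_rack']
```

The design choice here is that the robot never copies the human's motion directly; it only uses the video to decide which of its own previously recorded behaviors to chain together, which is one simple way to cope with a mismatch between how a person and a robot move.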

This method allows the robot to generalize from a single example, reducing the need for extensive training data.

Real-World Applications and Future Implications

The RHyME framework has significant implications for the future of robotics:

- Home Assistance: Robots could quickly learn household chores by watching tutorial videos, making them more versatile in domestic settings.

- Industrial Automation: In manufacturing, robots could adapt to new assembly tasks with minimal reprogramming.

- Healthcare Support: Assistive robots could learn patient care routines by observing healthcare professionals, enhancing eldercare and rehabilitation services.

By reducing the reliance on extensive programming and training, RHyME could speed the deployment of adaptable robots across these sectors.

Curious about the future of robot learning? How might this technology evolve to enable robots to learn more complex tasks or even teach each other?

Share your thoughts and join the conversation at DailySciTech.com.